ibis.connect URL format

Snowflake

Install

Install Ibis and dependencies for the Snowflake backend:

Install with the snowflake extra:

pip install 'ibis-framework[snowflake]'And connect:

import ibis

con = ibis.snowflake.connect()- 1

- Adjust connection parameters as needed.

Install for Snowflake:

conda install -c conda-forge ibis-snowflakeAnd connect:

import ibis

con = ibis.snowflake.connect()- 1

- Adjust connection parameters as needed.

Install for Snowflake:

mamba install -c conda-forge ibis-snowflakeAnd connect:

import ibis

con = ibis.snowflake.connect()- 1

- Adjust connection parameters as needed.

Connect

ibis.snowflake.connect

con = ibis.snowflake.connect(

user="user",

password="password",

account="safpqpq-sq55555",

database="my_database",

schema="my_schema",

)ibis.snowflake.connect is a thin wrapper around ibis.backends.snowflake.Backend.do_connect.

Connection Parameters

do_connect

do_connect(self, create_object_udfs=True, **kwargs)

Connect to Snowflake.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| user | Username | required | |

| account | A Snowflake organization ID and a Snowflake user ID, separated by a hyphen. Note that a Snowflake user ID is a separate identifier from a username. See https://ibis-project.org/backends/Snowflake/ for details | required | |

| database | A Snowflake database and a Snowflake schema, separated by a /. See https://ibis-project.org/backends/Snowflake/ for details |

required | |

| password | Password. If empty or None then authenticator must be passed. |

required | |

| authenticator | String indicating authentication method. See https://docs.snowflake.com/en/developer-guide/python-connector/python-connector-example#connecting-with-oauth for details. Note that the authentication flow will not take place until a database connection is made. This means that ibis.snowflake.connect(...) can succeed, while subsequent API calls fail if the authentication fails for any reason. |

required | |

| create_object_udfs | bool | Enable object UDF extensions defined by Ibis on the first connection to the database. | True |

| kwargs | Any | Additional arguments passed to the DBAPI connection call. | {} |

In addition to ibis.snowflake.connect, you can also connect to Snowflake by passing a properly-formatted Snowflake connection URL to ibis.connect:

con = ibis.connect(f"snowflake://{user}:{password}@{account}/{database}")Authenticating with SSO

Ibis supports connecting to SSO-enabled Snowflake warehouses using the authenticator parameter.

You can use it in the explicit-parameters-style or in the URL-style connection APIs. All values of authenticator are supported.

Explicit

con = ibis.snowflake.connect(

user="user",

account="safpqpq-sq55555",

database="my_database",

schema="my_schema",

warehouse="my_warehouse",

authenticator="externalbrowser",

)URL

con = ibis.connect(

f"snowflake://{user}@{account}/{database}/{schema}?warehouse={warehouse}",

authenticator="externalbrowser",

)Authenticating with Key Pair Authentication

Ibis supports connecting to Snowflake warehouses using private keys.

You can use it in the explicit-parameters-style or in the URL-style connection APIs.

Explicit

con = ibis.snowflake.connect(

user="user",

account="safpqpq-sq55555",

database="my_database",

schema="my_schema",

warehouse="my_warehouse",

# extracted private key from .p8 file

private_key=os.getenv(SNOWFLAKE_PKEY),

)URL

con = ibis.connect(

f"snowflake://{user}@{account}/{database}/{schema}?warehouse={warehouse}",

private_key=os.getenv(SNOWFLAKE_PKEY),

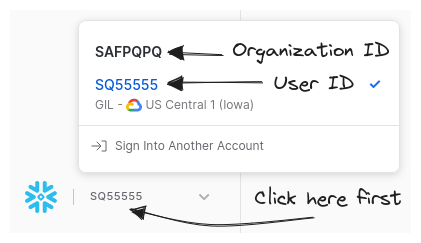

)Looking up your Snowflake organization ID and user ID

A Snowflake account identifier consists of an organization ID and a user ID, separated by a hyphen.

This user ID is not the same as the username you log in with.

To find your organization ID and user ID, log in to the Snowflake web app, then click on the text just to the right of the Snowflake logo (in the lower-left-hand corner of the screen).

The bold text at the top of the little pop-up window is your organization ID. The bold blue text with a checkmark next to it is your user ID.

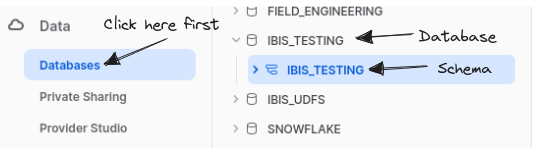

Choosing a value for database

Snowflake refers to a collection of tables as a schema, and a collection of schema as a database.

You must choose a database and a schema to connect to. You can refer to the available databases and schema in the “Data” sidebar item in the Snowflake web app.

snowflake.Backend

compile

compile(self, expr, /, *, limit=None, params=None, pretty=False)

Compile an expression to a SQL string.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | An ibis expression to compile. | required |

| limit | int | None | An integer to effect a specific row limit. A value of None means no limit. |

None |

| params | Mapping[ir.Expr, Any] | None | Mapping of scalar parameter expressions to value. | None |

| pretty | bool | Pretty print the SQL query during compilation. | False |

Returns

| Name | Type | Description |

|---|---|---|

| str | Compiled expression |

connect

connect(self, *args, **kwargs)

Connect to the database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| *args | Mandatory connection parameters, see the docstring of do_connect for details. |

() |

|

| **kwargs | Extra connection parameters, see the docstring of do_connect for details. |

{} |

Notes

This creates a new backend instance with saved args and kwargs, then calls reconnect and finally returns the newly created and connected backend instance.

Returns

| Name | Type | Description |

|---|---|---|

| BaseBackend | An instance of the backend |

create_catalog

create_catalog(self, name, /, *, force=False)

Create a new catalog.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Name of the new catalog. | required |

| force | bool | If False, an exception is raised if the catalog already exists. |

False |

create_database

create_database(self, name, /, *, catalog=None, force=False)

Create a database named name in catalog.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Name of the database to create. | required |

| catalog | str | None | Name of the catalog in which to create the database. If None, the current catalog is used. |

None |

| force | bool | If False, an exception is raised if the database exists. |

False |

create_table

create_table(self, name, /, obj=None, *, schema=None, database=None, temp=False, overwrite=False, comment=None)

Create a table in Snowflake.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Name of the table to create | required |

| obj | ir.Table | pd.DataFrame | pa.Table | pl.DataFrame | pl.LazyFrame | None | The data with which to populate the table; optional, but at least one of obj or schema must be specified |

None |

| schema | sch.IntoSchema | None | The schema of the table to create; optional, but at least one of obj or schema must be specified |

None |

| database | str | None | The name of the database in which to create the table; if not passed, the current database is used. | None |

| temp | bool | Create a temporary table | False |

| overwrite | bool | If True, replace the table if it already exists, otherwise fail if the table exists |

False |

| comment | str | None | Add a comment to the table | None |

create_view

create_view(self, name, /, obj, *, database=None, overwrite=False)

Create a view from an Ibis expression.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | The name of the view to create. | required |

| obj | ir.Table | The Ibis expression to create the view from. | required |

| database | str | None | The database that the view should be created in. | None |

| overwrite | bool | If True, replace an existing view with the same name. |

False |

Returns

| Name | Type | Description |

|---|---|---|

| ir.Table | A table expression representing the view. |

disconnect

disconnect(self)

Disconnect from the backend.

drop_catalog

drop_catalog(self, name, /, *, force=False)

Drop a catalog with name name.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Catalog to drop. | required |

| force | bool | If False, an exception is raised if the catalog does not exist. |

False |

drop_database

drop_database(self, name, /, *, catalog=None, force=False)

Drop the database with name in catalog.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Name of the schema to drop. | required |

| catalog | str | None | Name of the catalog to drop the database from. If None, the current catalog is used. |

None |

| force | bool | If False, an exception is raised if the database does not exist. |

False |

drop_table

drop_table(self, name, /, *, database=None, force=False)

Drop a table from the backend.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | The name of the table to drop | required |

| database | tuple[str, str] | str | None | The database that the table is located in. | None |

| force | bool | If True, do not raise an error if the table does not exist. |

False |

drop_view

drop_view(self, name, /, *, database=None, force=False)

Drop a view from the backend.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | The name of the view to drop. | required |

| database | str | None | The database that the view is located in. | None |

| force | bool | If True, do not raise an error if the view does not exist. |

False |

execute

execute(self, expr, /, *, params=None, limit=None, **kwargs)

Execute an Ibis expression and return a pandas DataFrame, Series, or scalar.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to execute. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. The default is in ibis/config.py. |

None |

| kwargs | Any | Keyword arguments | {} |

Returns

| Name | Type | Description |

|---|---|---|

| DataFrame | Series | scalar | The result of the expression execution. |

from_connection

from_connection(cls, con, /, *, create_object_udfs=True)

Create an Ibis Snowflake backend from an existing connection.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| con | snowflake.connector.SnowflakeConnection | snowflake.snowpark.Session | A Snowflake Connector for Python connection or a Snowpark session instance. | required |

| create_object_udfs | bool | Enable object UDF extensions defined by Ibis on the first connection to the database. | True |

Returns

| Name | Type | Description |

|---|---|---|

| Backend | An Ibis Snowflake backend instance. |

Examples

>>> import ibis

>>> ibis.options.interactive = True

>>> import snowflake.snowpark as sp

>>> session = sp.Session.builder.configs(...).create()

>>> con = ibis.snowflake.from_connection(session)

>>> batting = con.tables.BATTING

>>> batting[["playerID", "RBI"]].head()

┏━━━━━━━━━━━┳━━━━━━━┓

┃ playerID ┃ RBI ┃

┡━━━━━━━━━━━╇━━━━━━━┩

│ string │ int64 │

├───────────┼───────┤

│ abercda01 │ 0 │

│ addybo01 │ 13 │

│ allisar01 │ 19 │

│ allisdo01 │ 27 │

│ ansonca01 │ 16 │

└───────────┴───────┘from_snowpark

from_snowpark(cls, session, *, create_object_udfs=True)

Create an Ibis Snowflake backend from a Snowpark session.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| session | snowflake.snowpark.Session | A Snowpark session instance. | required |

| create_object_udfs | bool | Enable object UDF extensions defined by Ibis on the first connection to the database. | True |

Returns

| Name | Type | Description |

|---|---|---|

| Backend | An Ibis Snowflake backend instance. |

Examples

>>> import ibis

>>> ibis.options.interactive = True

>>> import snowflake.snowpark as sp

>>> session = sp.Session.builder.configs(...).create()

>>> con = ibis.snowflake.from_snowpark(session)

>>> batting = con.tables.BATTING

>>> batting[["playerID", "RBI"]].head()

┏━━━━━━━━━━━┳━━━━━━━┓

┃ playerID ┃ RBI ┃

┡━━━━━━━━━━━╇━━━━━━━┩

│ string │ int64 │

├───────────┼───────┤

│ abercda01 │ 0 │

│ addybo01 │ 13 │

│ allisar01 │ 19 │

│ allisdo01 │ 27 │

│ ansonca01 │ 16 │

└───────────┴───────┘get_schema

get_schema(self, table_name, *, catalog=None, database=None)

has_operation

has_operation(cls, operation, /)

Return whether the backend supports the given operation.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| operation | type[ops.Value] | Operation type, a Python class object. | required |

insert

insert(self, name, /, obj, *, database=None, overwrite=False)

Insert data into a table.

schema to refer to database hierarchy.

A collection of tables is referred to as a database. A collection of database is referred to as a catalog. These terms are mapped onto the corresponding features in each backend (where available), regardless of whether the backend itself uses the same terminology.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | The name of the table to which data needs will be inserted | required |

| obj | IntoMemtable | ir.Table | The source data or expression to insert | required |

| database | str | None | Name of the attached database that the table is located in. For multi-level table hierarchies, you can pass in a dotted string path like "catalog.database" or a tuple of strings like ("catalog", "database"). |

None |

| overwrite | bool | If True then replace existing contents of table |

False |

list_catalogs

list_catalogs(self, *, like=None)

List existing catalogs in the current connection.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| like | str | None | A pattern in Python’s regex format to filter returned catalog names. | None |

Returns

| Name | Type | Description |

|---|---|---|

| list[str] | The catalog names that exist in the current connection, that match the like pattern if provided. |

list_databases

list_databases(self, *, like=None, catalog=None)

List existing databases in the current connection.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of the terminology the backend uses.

See the Table Hierarchy Concepts Guide for more info.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| like | str | None | A pattern in Python’s regex format to filter returned database names. | None |

| catalog | str | None | The catalog to list databases from. If None, the current catalog is searched. |

None |

Returns

| Name | Type | Description |

|---|---|---|

| list[str] | The database names that exist in the current connection, that match the like pattern if provided. |

list_tables

list_tables(self, *, like=None, database=None)

The table names that match like in the given database.

For some backends, the tables may be files in a directory, or other equivalent entities in a SQL database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| like | str | None | A pattern in Python’s regex format. | None |

| database | tuple[str, str] | str | None | The database, or (catalog, database) from which to list tables. For backends that support a single-level table hierarchy, you can pass in a string like "bar". For backends that support multi-level table hierarchies, you can pass in a dotted string path like "catalog.database" or a tuple of strings like ("catalog", "database"). If not provided, the current database (and catalog, if applicable for this backend) is used. See the Table Hierarchy Concepts Guide for more info. |

None |

Returns

| Name | Type | Description |

|---|---|---|

| list[str] | The list of the table names that match the pattern like. |

Examples

This example uses the DuckDB backend, but the list_tables API works the same for other backends.

>>> import ibis

>>> con = ibis.duckdb.connect()

>>> foo = con.create_table("foo", schema=ibis.schema(dict(a="int")))

>>> con.list_tables()

['foo']

>>> bar = con.create_view("bar", foo)

>>> con.list_tables()

['bar', 'foo']

>>> con.create_database("my_database")

>>> con.list_tables(database="my_database")

[]

>>> con.raw_sql("CREATE TABLE my_database.baz (a INTEGER)")

<duckdb.duckdb.DuckDBPyConnection object at 0x...>

>>> con.list_tables(database="my_database")

['baz']raw_sql

raw_sql(self, query, **kwargs)

read_csv

read_csv(self, path, /, *, table_name=None, **kwargs)

Register a CSV file as a table in the Snowflake backend.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| path | str | Path | A string or Path to a CSV file; globs are supported | required |

| table_name | str | None | Optional name for the table; if not passed, a random name will be generated | None |

| kwargs | Any | Snowflake-specific file format configuration arguments. See the documentation for the full list of options: https://docs.snowflake.com/en/sql-reference/sql/create-file-format#type-csv | {} |

Returns

| Name | Type | Description |

|---|---|---|

| Table | The table that was read from the CSV file |

read_delta

read_delta(self, path, /, *, table_name=None, **kwargs)

Register a Delta Lake table in the current database.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| path | str | Path | The data source. Must be a directory containing a Delta Lake table. | required |

| table_name | str | None | An optional name to use for the created table. This defaults to a sequentially generated name. | None |

| **kwargs | Any | Additional keyword arguments passed to the underlying backend or library. | {} |

Returns

| Name | Type | Description |

|---|---|---|

| ir.Table | The just-registered table. |

read_json

read_json(self, path, /, *, table_name=None, **kwargs)

Read newline-delimited JSON into an ibis table, using Snowflake.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| path | str | Path | A string or Path to a JSON file; globs are supported | required |

| table_name | str | None | Optional table name | None |

| kwargs | Any | Additional keyword arguments. See https://docs.snowflake.com/en/sql-reference/sql/create-file-format#type-json for the full list of options. | {} |

Returns

| Name | Type | Description |

|---|---|---|

| Table | An ibis table expression |

read_parquet

read_parquet(self, path, /, *, table_name=None, **kwargs)

Read a Parquet file into an ibis table, using Snowflake.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| path | str | Path | A string or Path to a Parquet file; globs are supported | required |

| table_name | str | None | Optional table name | None |

| kwargs | Any | Additional keyword arguments. See https://docs.snowflake.com/en/sql-reference/sql/create-file-format#type-parquet for the full list of options. | {} |

Returns

| Name | Type | Description |

|---|---|---|

| Table | An ibis table expression |

reconnect

reconnect(self)

Reconnect to the database already configured with connect.

register_options

register_options(cls)

Register custom backend options.

rename_table

rename_table(self, old_name, new_name)

Rename an existing table.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| old_name | str | The old name of the table. | required |

| new_name | str | The new name of the table. | required |

sql

sql(self, query, /, *, schema=None, dialect=None)

Create an Ibis table expression from a SQL query.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| query | str | A SQL query string | required |

| schema | IntoSchema | None | The schema of the query. If not provided, Ibis will try to infer the schema of the query. | None |

| dialect | str | None | The SQL dialect of the query. If not provided, the backend’s dialect is assumed. This argument can be useful when the query is written in a different dialect from the backend. | None |

Returns

| Name | Type | Description |

|---|---|---|

| ir.Table | The table expression representing the query |

table

table(self, name, /, *, database=None)

to_csv

to_csv(self, expr, /, path, *, params=None, **kwargs)

Write the results of executing the given expression to a CSV file.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Table | The ibis expression to execute and persist to CSV. | required |

| path | str | Path | The data source. A string or Path to the CSV file. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| kwargs | Any | Additional keyword arguments passed to pyarrow.csv.CSVWriter | {} |

| https | required |

to_delta

to_delta(self, expr, /, path, *, params=None, **kwargs)

Write the results of executing the given expression to a Delta Lake table.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Table | The ibis expression to execute and persist to Delta Lake table. | required |

| path | str | Path | The data source. A string or Path to the Delta Lake table. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| kwargs | Any | Additional keyword arguments passed to deltalake.writer.write_deltalake method | {} |

to_json

to_json(self, expr, /, path, **kwargs)

Write the results of expr to a json file of [{column -> value}, …] objects.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Table | The ibis expression to execute and persist to Delta Lake table. | required |

| path | str | Path | The data source. A string or Path to the Delta Lake table. | required |

| kwargs | Any | Additional, backend-specifc keyword arguments. | {} |

to_pandas

to_pandas(self, expr, /, *, params=None, limit=None, **kwargs)

Execute an Ibis expression and return a pandas DataFrame, Series, or scalar.

This method is a wrapper around execute.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to execute. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. The default is in ibis/config.py. |

None |

| kwargs | Any | Keyword arguments | {} |

to_pandas_batches

to_pandas_batches(self, expr, /, *, params=None, limit=None, chunk_size=1000000)

Execute an Ibis expression and return an iterator of pandas DataFrames.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to execute. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. The default is in ibis/config.py. |

None |

| chunk_size | int | Maximum number of rows in each returned DataFrame batch. This may have no effect depending on the backend. |

1000000 |

| kwargs | Any | Keyword arguments | {} |

Returns

| Name | Type | Description |

|---|---|---|

| Iterator[pd.DataFrame] | An iterator of pandas DataFrames. |

to_parquet

to_parquet(self, expr, /, path, *, params=None, **kwargs)

Write the results of executing the given expression to a parquet file.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Table | The ibis expression to execute and persist to parquet. | required |

| path | str | Path | The data source. A string or Path to the parquet file. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| **kwargs | Any | Additional keyword arguments passed to pyarrow.parquet.ParquetWriter | {} |

| https | required |

to_parquet_dir

to_parquet_dir(self, expr, /, directory, *, params=None, **kwargs)

Write the results of executing the given expression to a parquet file in a directory.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Table | The ibis expression to execute and persist to parquet. | required |

| directory | str | Path | The data source. A string or Path to the directory where the parquet file will be written. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| **kwargs | Any | Additional keyword arguments passed to pyarrow.dataset.write_dataset | {} |

| https | required |

to_polars

to_polars(self, expr, /, *, params=None, limit=None, **kwargs)

Execute expression and return results in as a polars DataFrame.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to export to polars. | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. The default is in ibis/config.py. |

None |

| kwargs | Any | Keyword arguments | {} |

Returns

| Name | Type | Description |

|---|---|---|

| dataframe | A polars DataFrame holding the results of the executed expression. |

to_pyarrow

to_pyarrow(self, expr, /, *, params=None, limit=None, **kwargs)

Execute expression to a pyarrow object.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to export to pyarrow | required |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. The default is in ibis/config.py. |

None |

| kwargs | Any | Keyword arguments | {} |

Returns

| Name | Type | Description |

|---|---|---|

| result | If the passed expression is a Table, a pyarrow table is returned. If the passed expression is a Column, a pyarrow array is returned. If the passed expression is a Scalar, a pyarrow scalar is returned. |

to_pyarrow_batches

to_pyarrow_batches(self, expr, /, *, params=None, limit=None, chunk_size=1000000, **kwargs)

Execute expression and return an iterator of PyArrow record batches.

This method is eager and will execute the associated expression immediately.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to export to pyarrow | required |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means “no limit”. The default is in ibis/config.py. |

None |

| params | Mapping[ir.Scalar, Any] | None | Mapping of scalar parameter expressions to value. | None |

| chunk_size | int | Maximum number of rows in each returned record batch. | 1000000 |

Returns

| Name | Type | Description |

|---|---|---|

| RecordBatchReader | Collection of pyarrow RecordBatchs. |

to_torch

to_torch(self, expr, /, *, params=None, limit=None, **kwargs)

Execute an expression and return results as a dictionary of torch tensors.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| expr | ir.Expr | Ibis expression to execute. | required |

| params | Mapping[ir.Scalar, Any] | None | Parameters to substitute into the expression. | None |

| limit | int | str | None | An integer to effect a specific row limit. A value of None means no limit. |

None |

| kwargs | Any | Keyword arguments passed into the backend’s to_torch implementation. |

{} |

Returns

| Name | Type | Description |

|---|---|---|

| dict[str, torch.Tensor] | A dictionary of torch tensors, keyed by column name. |

truncate_table

truncate_table(self, name, /, *, database=None)

Delete all rows from a table.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of whether the backend itself uses the same terminology.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | Table name | required |

| database | str | tuple[str, str] | None | Name of the attached database that the table is located in. For backends that support multi-level table hierarchies, you can pass in a dotted string path like "catalog.database" or a tuple of strings like ("catalog", "database"). |

None |

upsert

upsert(self, name, /, obj, on, *, database=None)

Upsert data into a table.

schema to refer to database hierarchy.

A collection of table is referred to as a database. A collection of database is referred to as a catalog.

These terms are mapped onto the corresponding features in each backend (where available), regardless of whether the backend itself uses the same terminology.

Parameters

| Name | Type | Description | Default |

|---|---|---|---|

| name | str | The name of the table to which data will be upserted | required |

| obj | ir.Table | IntoMemtable | The source data or expression to upsert | required |

| on | str | Column name to join on | required |

| database | str | None | Name of the attached database that the table is located in. For backends that support multi-level table hierarchies, you can pass in a dotted string path like "catalog.database" or a tuple of strings like ("catalog", "database"). |

None |